- Foundation world models: new preprint online

- TEA Lab moves to Malachowsky Hall

Now found in Malachowsky 4100, with a brand new racing track coming soon!

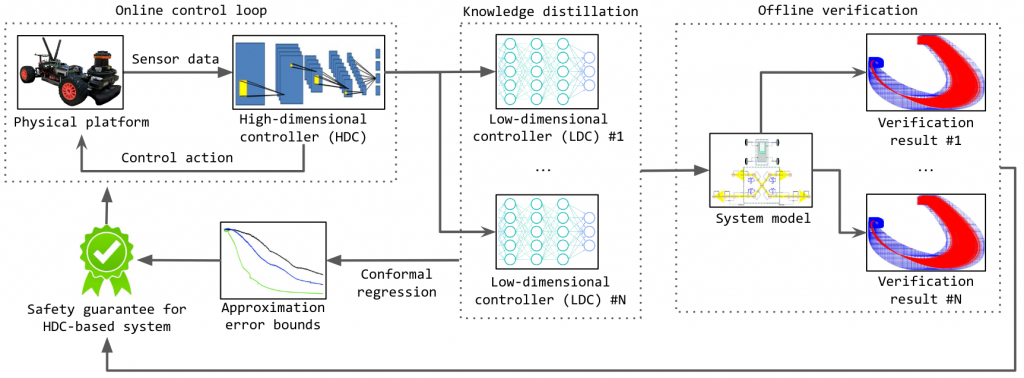

- Verifying high-dimensional controllers: new preprint online

- Ivan participates in a panel on dependable space autonomy

- Ivan serves on the PC of ICCPS’24 and AAAI’24

Consider submitting your papers there.

- How safe am I given what I see? New preprint online

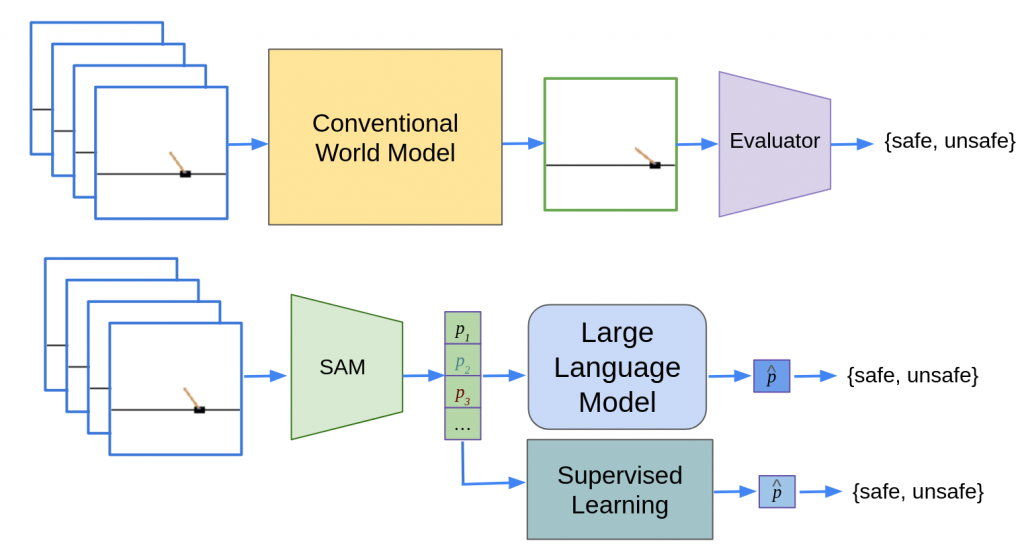

Update: a poster was presented at UF AI Days 2023. This paper develops safety chance prediction for image-controlled autonomous systems with calibration guarantees. Citation:

- Zhenjiang Mao, Carson Sobolewski, Ivan Ruchkin. How Safe Am I Given What I See? Calibrated Prediction of Safety Chances for Image-Controlled Autonomy [arxiv]. Preprint, in submission.
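As a rough illustration of what "calibration" means for predicted safety chances (not the paper's method), the sketch below computes an expected calibration error: the average gap between predicted probabilities and observed safe-outcome frequencies. The function name `expected_calibration_error`, the equal-width binning, and the toy data are assumptions made for this example.

```python
def expected_calibration_error(probs, outcomes, n_bins=5):
    """Weighted average gap between mean predicted probability and
    observed frequency of safe outcomes, over equal-width bins.

    probs:    predicted chances of safety, each in [0, 1]
    outcomes: 1 if the run was actually safe, 0 otherwise
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        # Clamp so that p == 1.0 falls into the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))

    total = len(probs)
    ece = 0.0
    for b in bins:
        if not b:
            continue  # empty bins contribute nothing
        mean_p = sum(p for p, _ in b) / len(b)
        freq = sum(y for _, y in b) / len(b)
        ece += (len(b) / total) * abs(mean_p - freq)
    return ece


# Hypothetical toy data: a predictor that says "90% safe" and is right 9 times out of 10.
well_calibrated = expected_calibration_error([0.9] * 10, [1] * 9 + [0])
# A predictor that says "80% safe" but is right only half the time.
overconfident = expected_calibration_error([0.8] * 4, [1, 1, 0, 0])
```

A value near zero means the predicted chances match how often the system was actually safe; a larger value, as for the second predictor, signals over- or under-confidence.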

- Invited talk at the DACPS workshop & ETH Autonomy Talks

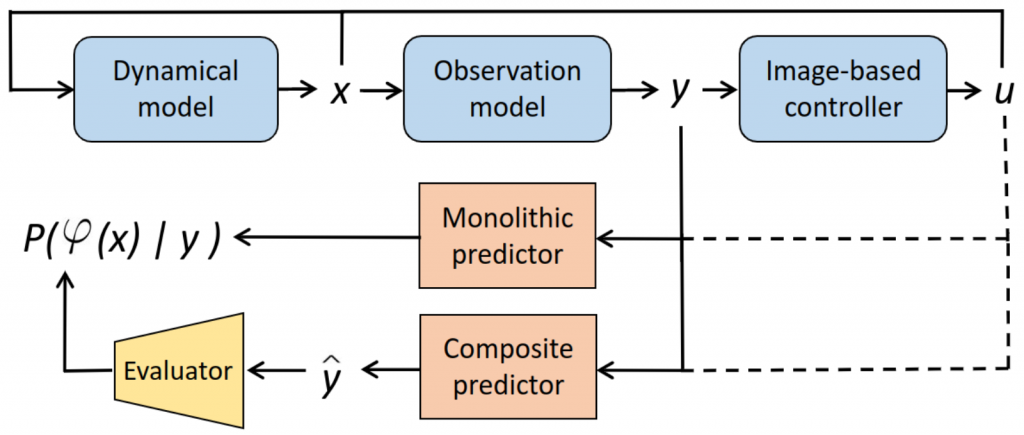

Update 1: an extended version of this talk was given at a UF MAE Affiliate Seminar. The recording can be found here (UF login required).

Update 2: another version of this talk was given at the ETH Autonomy Talks (video).

Update 3: yet another version of this talk was given as a CNEL Seminar.

The talk titled “Verify-then-Monitor: Calibration Guarantees for Safety Confidence” (see the slides here) was presented at the Sixth International Workshop on Design Automation for Cyber-Physical Systems (DACPS), part of the Design Automation Conference (DAC) 2023.

Abstract: Autonomous cyber-physical systems (CPS) are increasingly deployed in complex and safety-critical environments. To help CPS interact with such environments, learning-enabled components, such as neural networks, often implement perception and control functions. Unfortunately, the complexity of the environments and learning components is a major challenge to ensuring the safety of CPS. An emerging assurance paradigm prescribes verifying as much of the CPS as possible at design time – and then monitoring the probability of safety at run time in case of unexpected situations. How can we guarantee that the monitor produces a probability that is well-calibrated to the true chance of safety? This talk will overview our recent answers in two settings. The first combines Bayesian filtering with probabilistic model checking of Markov decision processes. The second is based on confidence monitoring of assumptions behind closed-loop neural-network verification.

- TEA Lab hosts K-12 students for the Robotics-AIoT Visit Day

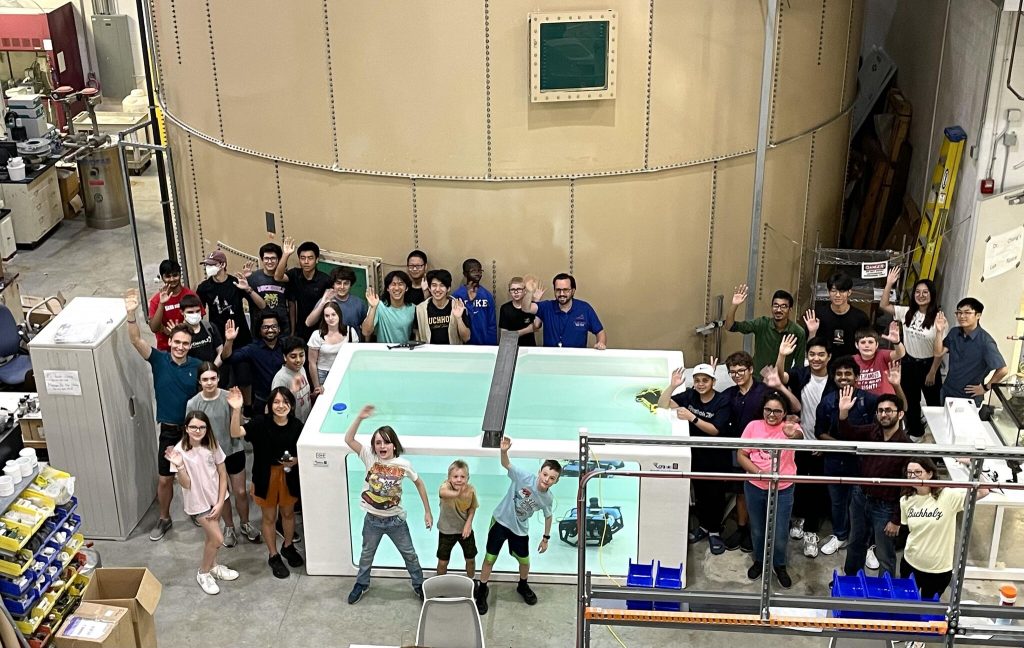

On June 15, 2023, the UF ECE Department hosted ~30 students from Westwood Middle School and Buchholz High School for a day-long visit to the Robotics, AI, and IoT research laboratories, with educational presentations, research demonstrations, and mentoring discussions. It was a lot of fun for everyone!

Photos from the event. Kudos to the other participating labs: SmartDATA Lab, RoboPI Lab, and WISE Lab.

- Causal NN controller repair presented at ICAA’23

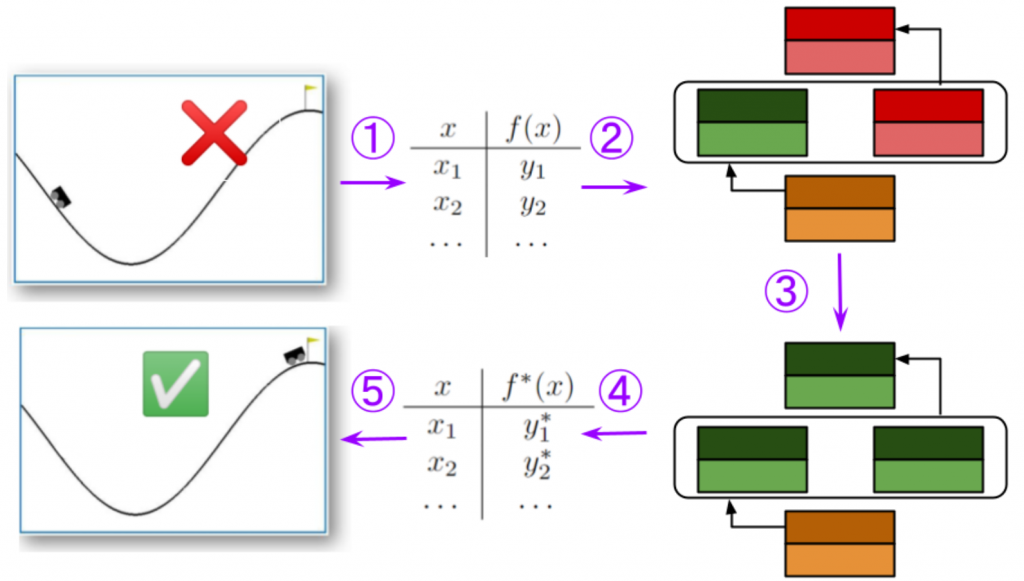

Shown above is a 5-step workflow of our causal repair: (1) Extract the behaviors of a learning component as an I/O table. (2) Encode the dependency of the desired property outcome on the I/O behaviors with a Halpern-Pearl model. (3) Search for a counterfactual model value assignment, revealing an actual cause and a repair. (4) Decode the found assignment as a counterfactual component behavior. (5) Replace the original learning component with a repaired component that performs this counterfactual behavior to fix the system. Citation:

- Pengyuan Lu, Ivan Ruchkin, Matthew Cleaveland, Oleg Sokolsky, Insup Lee. Causal Repair of Learning-Enabled Cyber-Physical Systems [ArXiv]. In Proceedings of the International Conference on Assured Autonomy (ICAA), Baltimore, MD, 2023.
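To give a feel for the search in steps (3) to (5), here is a heavily simplified sketch. It uses a brute-force search over an I/O table in place of a Halpern-Pearl model, so it is not the paper's algorithm. The names `find_repair` and `reaches_goal`, the 1-D track, and the controller table are all hypothetical.

```python
from itertools import combinations, product


def find_repair(io_table, outputs, property_holds):
    """Search for a smallest counterfactual change to io_table that makes
    property_holds true. Returns (repaired_table, changed_entries),
    or (None, None) if no assignment satisfies the property."""
    keys = list(io_table)
    for k in range(len(keys) + 1):             # try the fewest changes first
        for subset in combinations(keys, k):   # which inputs to alter
            for values in product(outputs, repeat=k):  # counterfactual outputs
                candidate = dict(io_table)
                candidate.update(zip(subset, values))
                if property_holds(candidate):
                    changed = {x: v for x, v in zip(subset, values)
                               if io_table[x] != v}
                    return candidate, changed
    return None, None


def reaches_goal(table, start=0, goal=3, steps=6):
    """Toy system property: following the controller from `start` ends at
    `goal` after `steps` moves without leaving the track."""
    pos = start
    for _ in range(steps):
        pos += table[pos]
        if pos not in table:   # drove off the track
            return False
    return pos == goal


# Hypothetical controller as an I/O table: position -> move.
# It gets stuck at position 2, so the goal at position 3 is never reached.
controller = {0: 1, 1: 1, 2: 0, 3: 0}
repaired, changed = find_repair(controller, outputs=(-1, 0, 1),
                                property_holds=reaches_goal)
print(changed)
```

In this toy setting, the changed entry plays the role of the actual cause, and the repaired table is the counterfactual behavior that replaces the original component. Exhaustive search is only workable for tiny tables.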

- Conservative safety monitoring presented at NFM’23

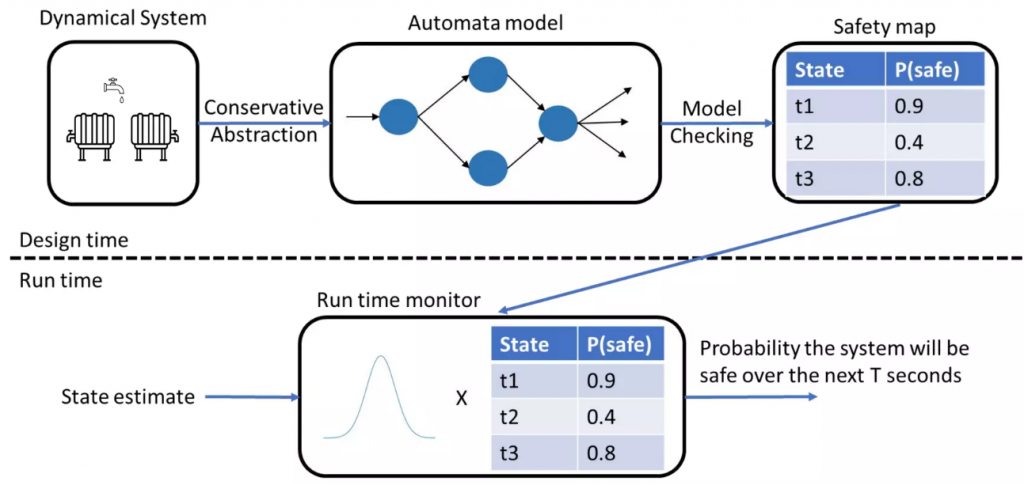

Shown above is our conservative monitoring approach that leverages probabilistic reachability offline and combines it with calibrated state estimation. Citation:

- Matthew Cleaveland, Oleg Sokolsky, Insup Lee, Ivan Ruchkin. Conservative Safety Monitors of Stochastic Dynamical Systems [ArXiv] [Springer] [Slides]. In Proceedings of the NASA Formal Methods Symposium (NFM), Houston, TX, 2023.

- DonkeyCars are racing autonomously

Our lab is now running racing cars controlled by neural networks from raw camera images. Sometimes things don’t go as planned. Such is the brittle nature of deep learning. We’ll be working on predicting and preventing such accidents.

- Ivan Ruchkin to serve on the PC of ICCPS’23

- TEA Lab is established

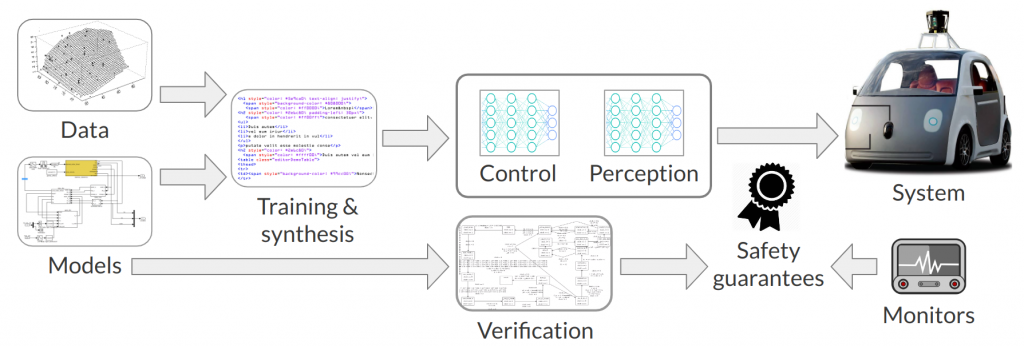

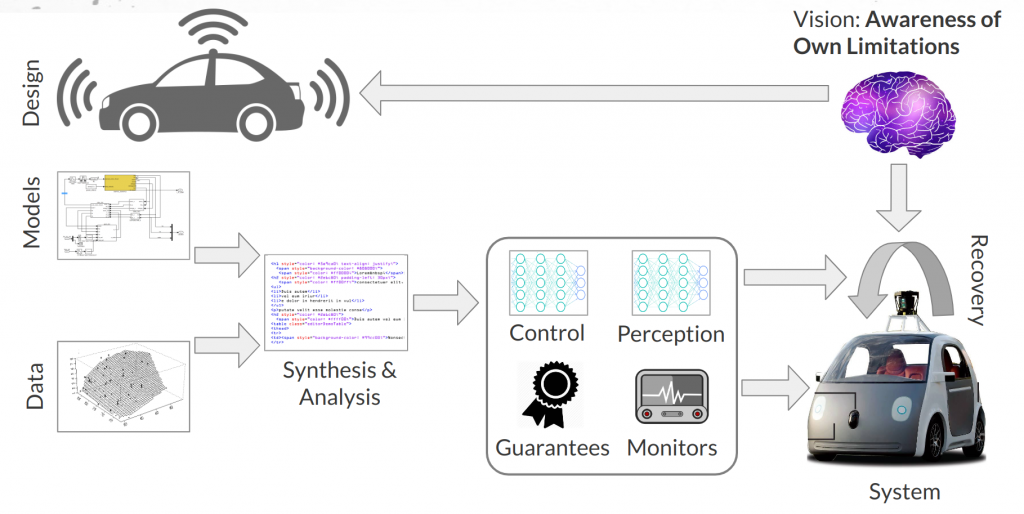

TEA Lab’s mission is to develop engineering methodologies for safe autonomous systems that are aware of their own limitations, as illustrated above. More details about this vision can be found in this slide deck.
