Update 1: an extended version of this talk was given at a UF MAE Affiliate Seminar. The recording can be found here (UF login required).

Update 2: another version of this talk was given at the ETH Autonomy Talks (video).

Update 3: yet another version of this talk was given as a CNEL Seminar.

The talk titled “Verify-then-Monitor: Calibration Guarantees for Safety Confidence” (see the slides here) was presented at the Sixth International Workshop on Design Automation for Cyber-Physical Systems (DACPS), part of the Design Automation Conference (DAC) 2023.

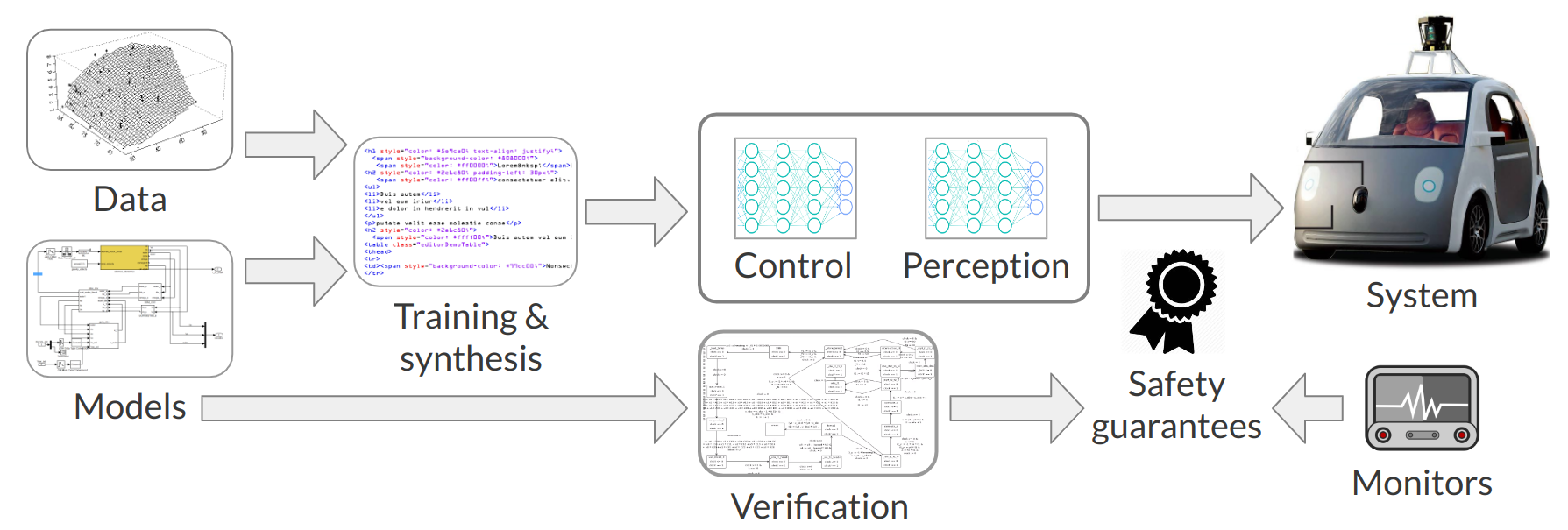

Abstract: Autonomous cyber-physical systems (CPS) are increasingly deployed in complex and safety-critical environments. To help CPS interact with such environments, learning-enabled components, such as neural networks, often implement perception and control functions. Unfortunately, the complexity of the environments and learning components is a major challenge to ensuring the safety of CPS. An emerging assurance paradigm prescribes verifying as much of the CPS as possible at design time – and then monitoring the probability of safety at run time in case of unexpected situations. How can we guarantee that the monitor produces a probability that is well-calibrated to the true chance of safety? This talk will overview our recent answers in two settings. The first combines Bayesian filtering with probabilistic model checking of Markov decision processes. The second is based on confidence monitoring of assumptions behind closed-loop neural-network verification.
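To make the notion of a "well-calibrated" safety probability concrete, here is a minimal Python sketch of one common way to measure calibration empirically: the expected calibration error (ECE) over binned predictions. This is an illustration only and not the method from the talk. The function name `expected_calibration_error`, the equal-width binning, and the toy data are all assumptions made for this example.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(
    probs: Sequence[float], safe: Sequence[bool], n_bins: int = 10
) -> float:
    """Binned gap between predicted safety probability and observed safety rate.

    probs: safety probabilities reported by a (hypothetical) monitor, in [0, 1].
    safe:  whether the corresponding run actually turned out to be safe.
    """
    if len(probs) != len(safe) or len(probs) == 0:
        raise ValueError("probs and safe must be non-empty and of equal length")

    # Group predictions into equal-width probability bins.
    bins: List[List[Tuple[float, float]]] = [[] for _ in range(n_bins)]
    for p, s in zip(probs, safe):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, 1.0 if s else 0.0))

    # Weighted average of |mean predicted probability - observed frequency|.
    total = len(probs)
    ece = 0.0
    for b in bins:
        if not b:
            continue
        mean_prob = sum(p for p, _ in b) / len(b)
        safe_rate = sum(s for _, s in b) / len(b)
        ece += (len(b) / total) * abs(mean_prob - safe_rate)
    return ece


# Toy data: a monitor that says 0.9 and is right 9 times out of 10 is calibrated.
calibrated = expected_calibration_error([0.9] * 10, [True] * 9 + [False])
# A monitor that says 1.0 but is right only half the time is overconfident.
overconfident = expected_calibration_error([1.0] * 4, [True, True, False, False])
```

An ECE near zero means the reported probabilities match the observed safety frequencies on the evaluated runs. It is an empirical check on data, not a guarantee of the kind the talk discusses.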